I.

The arguments that follow are situated within a larger project entitled “Critique of Algorithmic Rationality.” That project, drawing deliberately from Immanuel Kant, is an attempt to move beyond the technological, social or cultural critiques of digital rationalities usually found in social, media and cultural theories and take a critical look at the validity of algorithmic approaches. It explores the limitations of the performance and purview of algorithmic schematics and is therefore grounded in a critique of mathematical reasoning. This investigation explicitly refrains from power analyses of digital surveillance structures and ignores critiques of disciplinary and controlling societies that draw from Michel Foucault and Gilles Deleuze as well as economic analyses of “data capitalist” value creation, which is not to suggest that those analyses are without relevance. At issue are neither applications nor practical alternatives but—analogous to the Critique of Pure Reason—the limits of algorithmic thought. I speak of “rationality” rather than “reason” in order to highlight the formal and “instrumental” character of this type of operation, which is currently conquering not only the world and reality as a whole, but also our selves, our bodies as well as our thinking, feeling, and knowing. At the same time, it is permeating aesthetic practices of design and creativity, and the idea of “making art” in general.
In his defense of the validity of “pure reason” against a program of excessive rationality, Kant of course not only postulated the difference between noumenon and phainomenon, as well as the unknowability of the thing-in-itself, but also explained the same with the a priori synthesis of apperception and concept and—at least in his first (edition A) version of “transcendental deduction”—in particular with the constitutive role of “imagination.” All cognition stems from this synthetic productivity, which in turn is kept in check by the categorization of concepts of understanding. Imagination—in the classic meaning of Pico della Mirandola’s and Marsilio Ficino’s imaginatio—functions in particular as the site of creativity. For Kant, it is the intermediate between perception and understanding; it is responsible for the formation and consolidation of manifold manifestations into a coherent impression, and also for the construction of a general outline or ‘schemata’. In this way, according to Kant, we are first able to become aware of and classify and thus perceive things and objects–one could also speak of their diagramatization, whereby the delineation of the schema involves, not only but mainly, its geometrization and mathematization. At the same time, there is something within the schemata that eludes linearity (the form as medium for a general abstraction), namely the excess of formation itself; for that which gives form, the praxis of design, may be grounded in general principles of construction but occludes its own construction. Every construction of construction defies its own principles, every totalization that also attempts to rule over that which constitutes the rules falls instead into the trap of its own exaggeration.

We will return to this fundamental idea again and again. The core of the imagination of a schema is something other than the schema itself, something which makes schematization possible, but is not an inherent part of it. We might also speak here of an erratic “intuition,” of the phantasia that first creates phenomena, or of an “inspiration” or inspiratio in the literal meaning of inhalation in the sense of ensoulment—“Begeisterung” as formulated by Georg Wilhelm Friedrich Hegel—stressing the eminently passive sense of the word. We can also express this as follows: imagination surpasses all formalism, especially because it is grounded in an Other, an alterity that in turn is captured neither by concept nor by definition but has its roots in a “beyond” that comes to us, that turns us around, and must be caught hold of in order to make us other than we are.

Thus from the outset we are confronted with the problem and the riddle of creativity. The argumentation that follows aims first, always following Kant, to show that creativity—or imagination—is an indispensable moment of all thought, no matter how formal. Second, it endeavors to give creativity its rightful place at the heart of synthesis, and thus of all judgment and cognition as well as all meaning and understanding. Third, in a shift from the Critique of Pure Reason to a Critique of Algorithmic Rationality, it assumes that while creativity is necessary to every form of mathematical proof, computation, formalization, and calculation, it can nevertheless be demonstrated that, fourth, while it makes both mathematical and algorithmic thought possible, it cannot itself be mathematized or algorithmized. My assertion is therefore that there is a fundamental limit to all algorithmics, the litmus test (and not ruler) of which is creativity. Chiefly we shall see that while something new or unprecedented can of course be made by algorithms—which remains unspectacular as long as we have not defined the concept of the new—the concept of creativity is no more equivalent to the production of newness than, conversely, such novelty, when created or invented, can, as this novelty or as a creative invention in the proper sense, be adequately evaluated or judged algorithmically. From that follows, firstly, that the concept of creativity, if it is to be useful for thought and definition or for judging and synthesis, must be greatly expanded (or, to speak metamathematically, be “essentially richer” than the constructive production of novelty). Furthermore, every act of creativity must at the same time comprise an ability to be evaluated as a creation if we want to be able to distinguish between something new that is interesting enough to be appraised and appreciated as such and that which we can call a “trivial novelty,” which may simply be a product of chance or an anomaly.

The latter condition in particular implies that every creatio—like all human thought incidentally—necessarily includes a moment of reflectio; that creativity itself presupposes not only a capacity for intuition or imagination, but also, as we shall see, for reflexivity. These ideas lead further to the assumption of the principal incommensurability of human reason and algorithmic rationality. Therefore even if machines can at some future point be considered to be “thinking,” it will always only be “another kind of thinking” or “something other than thinking”—an act that is best described not with cognitive vocabulary but rather as that which it obviously is: a type of formalism without a sense of the formality of formalization itself. Put another way, the process has an element of recursiveness, but not of reflexivity; yet it is the latter which is a condition for the possibility of that which we signify as thought, as cognition, knowledge or perception, but also as invention and creativity, for thinking is first and foremost always ‘thinking of thinking’, just as perception is always ‘perception of perception’. One could even go a step further: not only can machines not be creative, they are completely unable to think, just as little as they possess consciousness or semantics or pragmatics, because all of these concepts, like art, are fundamentally grounded in the social. They presuppose alterity.

II.

These deliberations begin with a text that not only made a key contribution to metamathematical explanations of calculability, decidability, and provability, but also marks one of the beginnings of the computer era itself; namely Alan Turing’s 1938 dissertation, Systems of Logic Based on Ordinals. Turing’s text was a reaction to the consequences of Kurt Gödel’s epochal incompleteness theorem of 1931, which demonstrated the impossibility of a total formalization of sufficiently complex mathematical systems containing a certain amount of arithmetic, and in so doing shook the foundations of Hilbert’s program. If there is no formal derivation for every true theorem, we are confronted with a fatal limitation of mathematical reason itself. Turing attempted to cushion this result by creating a sequence of logical systems that become increasingly “complete.” From each System L, a more complete System L’ is derived, from which L” is deduced, etc. This creates a hierarchy of levels, whereby it can of course be asked whether the end result is a complete formalization, the answer to which, as in Gödel’s theorem, is “no,” for the transitions from L to L’ to L”, etc. do not allow for any strict operative constructivism. Instead, Turing speaks of an “oracle” that “cannot be a machine; with the help of the oracle we could form a new kind of machine.” Or, to come back to our original point, there is no general rule for the transition from one formal system of logic to the next, but only an intuition or a creative invention.

For Turing, “oracle” was a denotation for divination or an intuition that solves problems not by proofs, but by leaps. An “oracle machine” in Turing’s sense would therefore be an algorithm that finds a solution in one step, without running through computations. That means that mathematical solutions necessitate non-mathematical invention or creativity as an essential condition for finding new paths outside of formalized schemata. We are therefore, one could say, dealing with a system of determinist machines between which exists a non-determinist “leap” (Sprung), a transition that is itself not logically deducible, but happens abruptly, “without transition.” I have called this a ‘leap’ in order to mark its fundamental, unignorable indetermination. It is also a paradox because the direction is not predetermined; a leap can only land as long as it knows nothing of how and where the transition will occur. For this reason, the only way to reconstruct a leap is aesthetically. Here aesthetics is understood as itself a praxis without rules, since there is no rule for a transition from one logical system to another—it must first be invented. Its inventio is the “event” (Ereignis), that is to say, it is not born of inferential derivation, but springs, aporetically, from “transitionless transitions.” In between are neither causalities nor a teleology, but at most a crack, a caesura, over which a leap must be taken. Thus, mathematics manifests itself as a creative act that has its foundation in both mechanisms and a certain illogical spontaneity. It is based—as postulated by intuitionism, one of the most influential philosophies of mathematics, to which Gödel also more or less adhered—on both formal and logical operations as well as on a series of abductive or intuitive acts that do not follow any mathematical calculations as such. 
Rather, they presuppose, to speak with Kant, a “free” and “reflective judgment,” which Kant denoted “aesthetical.” “Mathematical reasoning,” Turing said analogously, “may be regarded rather schematically as the exercise of a combination of two faculties, which we may call intuition and ingenuity. The activity of the intuition consists in making spontaneous judgments, which are not the result of conscious trains of reasoning. These judgments are often, but by no means invariably, correct (leaving aside the question as to what is meant by ‘correct’).” Turing continued, “I shall not attempt to explain this idea of ‘intuition’ any more explicitly. The exercise of ingenuity in mathematics consists in aiding the intuition through suitable arrangements of propositions, and perhaps geometrical figures or drawings. … The parts played by these two faculties differ of course from occasion to occasion, and from mathematician to mathematician.”

Put another way, the evolution of The Mathematical Experience, as Philip J. Davis and Reuben Hersh have called that unique and unparalleled ensemble of knowledge that is necessary to itself, nevertheless remains an “ensemble” that can be neither systematized from above nor standardized. Mathematics cannot be delineated with a universally valid formal language—as the failed utopia of Hilbert’s program insinuated—but is rooted in a “poetics of findings,” which makes it equal parts science and art. This poetics remains incomplete, a fragmented patchwork that does not reveal an overall pattern, but at best comes together as an open collection in which all elements are nevertheless in the right place and without alternative. From time to time, it may be expanded, or interstices can be filled, but only to reveal new gaps that in turn cannot be mastered using systematic methods. While algorithms can be specified and precise machines built that produce results in a certain and clearly defined way, there is no meta-algorithm, no mathematical machine of all machines that can generate all possible true statements or progress deductively from one system to another. While they seem to be able to deduce everything that can be represented within a system, they cannot decide whether it is representable. John von Neumann has explained accordingly, in reference to the structure of mathematical logic, “you can construct an automaton which can do anything any automaton can do, but you cannot construct an automaton which will predict the behaviour of any arbitrary automaton. In other words, you can build an organ which can do anything that can be done, but you cannot build an organ which tells you whether it can be done.” And, furthermore: “The feature is just this, that you can perform within the logical type that’s involved everything that’s feasible, but the question of whether something is feasible in a type belongs to a higher logical type.”
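
The structure of von Neumann's remark can be illustrated with a deliberately small toy model. The sketch below is an illustration under strong simplifying assumptions, not a proof: the behaviour of an automaton is reduced to the two labels "halts" and "loops", and the names `Predictor`, `contrary_behaviour`, `is_refuted`, and the three candidate predictors are hypothetical. It only shows the diagonal pattern: whatever a predictor says about an automaton built to consult it and do the opposite, the verdict is wrong.

```python
from typing import Callable

# A "predictor" is any procedure that claims to foresee the behaviour of a
# named automaton. Reducing behaviour to two labels is a simplifying assumption.
Predictor = Callable[[str], str]  # returns "halts" or "loops"


def contrary_behaviour(predictor: Predictor) -> str:
    """Behaviour of a hypothetical automaton named "contrary".

    It consults the predictor about itself and then does the opposite.
    """
    verdict = predictor("contrary")
    return "loops" if verdict == "halts" else "halts"


def is_refuted(predictor: Predictor) -> bool:
    """True if the predictor's verdict about "contrary" is wrong."""
    return predictor("contrary") != contrary_behaviour(predictor)


# Three arbitrary candidate predictors (all hypothetical).
candidates = {
    "optimist": lambda name: "halts",
    "pessimist": lambda name: "loops",
    "by_name_length": lambda name: "halts" if len(name) % 2 == 0 else "loops",
}

for label, predictor in candidates.items():
    print(label, "refuted:", is_refuted(predictor))
```

The toy model does not replace the formal argument; it merely makes visible why the question of feasibility has to be posed from outside the type of machine being asked about.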

It is thus similar to a fairy tale, in which the fairy perhaps grants our wish, but she does not tell us what we should wish. The term “poetics” is meant to capture this, for what we wish presupposes an equal share of both reflexivity and judgment. It follows that the “poetic” belongs in the realm of neither logic nor machines, but is a question of art as well as morality and our understanding of the meaning of life. Similarly, that which is calculable and that which is not cannot be subjected to further algorithmization; it shows itself, pointing toward that which proves to be “uncalculable.” Uncalculability is more than simply a negation of calculability. It denotes that which in no way conforms to the schemata of a computation—more similar to the “troublesome nowhere” than the “empty too much” where, as Rainer Maria Rilke states in his Fifth Duino Elegy, the “many-placed calculation is exactly resolved.”

III.

That is not to say that there has been any lack of attempts to nevertheless mathematize the jumps and cracks that do not want to be pinned down and to find algorithmic theories of creativity. Such theories are also interested in the possibilities of computer-generated art. If an algorithmic simulation of thought were to succeed, then the algorithmic replication of the phenomenon of creativity must also be possible. Hence, we are dealing not only with a question of algorithmic aesthetics but more importantly with the question of whether strong artificial intelligence can exist at all. Attempts to answer this question and to create a corresponding “artificial art” go as far back as the 1960s. These approaches rallied in particular against subjectivity in art, falsely ascribed to the avant-garde, countering with an “objective aesthetics” as laid out in George David Birkhoff’s quantitative aesthetic measure. Birkhoff’s simple formula, MÄ = O/C, provided the foundation; setting order (O) and complexity (C) in an inverse ratio. The more order and the less complexity an artwork exhibits, the higher its value, which in turn falls with rising complexity and a lower ordering of chaos. Birkhoff, using heuristic, statistical calculations of the amount of order and complexity, applied this formula to simplified examples such as vases, polyhedra, and the like. 
Artworks were not paradigmatic in this aesthetic theory, which did not stop Max Bense, an adherent of rationalism, from using a syncretic mix of semiotics and information theory to extrapolate an “exact” theory of art he termed “microaesthetics.” Working with Claude Shannon’s Mathematical Theory of Communication and Norbert Wiener’s Cybernetics, Bense transformed Birkhoff’s measure by replacing order and complexity with fictitious quantities such as “redundancy of information” (R) and “true information content” (Hi), creating his own formula for determining a supposedly more exact measure of creativity, MÄ = R/Hi. Redundancy, that is repeatability and thus the ability to communicate a recognizable order, has the position of the numerator and is thus the dominant measure of creativity or originality, while the true information content becomes the denominator, so that a work is better understood with increasing redundancy and becomes more opaque the more information it contains. Redundancy, as Bense explicated in “Small Abstract Aesthetics” in 1969, “means a kind of counter-concept to the concept of information … in that it does not designate the innovation value of a distribution of elements but the ballast value of this innovation, which accordingly is not new but is well known, which does not provide information but identification.”
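
To make the two ratios concrete, here is a minimal sketch in Python. Birkhoff's quotient is taken directly from the formula above. The reading of Bense's quantities is an assumption made only for illustration: Hi is taken to be the Shannon entropy of a symbol sequence and R the relative redundancy 1 − H/H_max, which the text does not specify. The function names are hypothetical.

```python
import math
from collections import Counter


def birkhoff_measure(order: float, complexity: float) -> float:
    """Birkhoff's quotient M = O / C."""
    if complexity <= 0:
        raise ValueError("complexity must be positive")
    return order / complexity


def shannon_entropy(symbols) -> float:
    """Average information per symbol, in bits."""
    counts = Counter(symbols)
    total = sum(counts.values())
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def bense_measure(symbols) -> float:
    """Illustrative reading of M = R / Hi.

    ASSUMPTION: Hi is the Shannon entropy H of the sequence and
    R is the relative redundancy 1 - H / H_max.
    """
    h = shannon_entropy(symbols)
    if h == 0:
        return math.inf  # a fully repetitive sequence carries no information
    h_max = math.log2(len(set(symbols)))  # entropy if all symbols were equally likely
    redundancy = 1 - h / h_max
    return redundancy / h


print(birkhoff_measure(order=6, complexity=3))
print(bense_measure("aaab"), bense_measure("abab"))
```

Under these assumptions the repetitive sequence "aaab" scores higher than the evenly mixed "abab", which scores zero. The sketch thereby reproduces the normative tilt the passage describes: the measure rewards what is already familiar and penalizes information.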

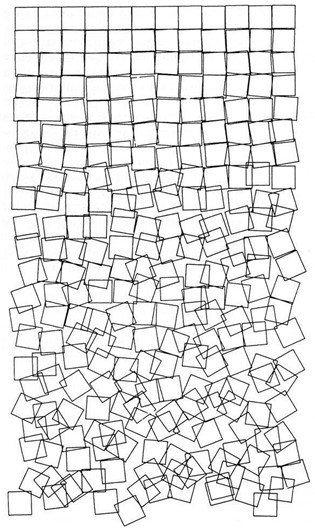

Here not only the hypothetical character of such speculations becomes obvious, but also the aim: to preclude an excess of creativity in order to enable digital art that has a guarantee of readability. Any art that desired acceptance would have to adhere to this normative balance. And in fact, this has been the unspoken premise of almost all mathematical descriptions of artistic processes ever since. It has also been the consistent demise of “art from the computer.” For this theory leads to the curious conclusion that an aesthetic object can only truly be appreciated when it is neither too simple nor too complex. The ideal ratio has even been set at around 37%—a number that not only divulges the rigorous restrictions of such aesthetic theories but also remains highly fictitious because there is no possibility of creating a scale via clearly defined units of measure. Such expositions are useful at best for statistical programming, such as that undertaken by Georg Nees, A. Michael Noll, and, in his early work, Frieder Nake, the “3N” computer pioneers who still believed in the revolutionary input of algorithms. Something was first made to conform to a theory of statistical probability and then the same basis was used to automatically implement and uphold the same. Nees’s “generative graphics” suffice as an example. These images, created with the Zuse Graphomat Z64, present for example groups of lines distributed across a plane, mathematically generated by defining a series of random numbers of points, directions, and lengths that together produce the intersecting vectors. The resulting image is a net of crossed lines, like another, similarly generated, image of well-ordered small squares whose position becomes increasingly “discombobulated.” Order transitions into chaos and chaos into order, similar to the way in which 2-D images create 3-D effects or 3-D structures appear to be 2-D surfaces (Fig 1: Georg Nees, Squares). For Nees, the aim was to use the machine to make something not technical, but “‘useless’— geometrical patterns.” But by no means did he accept every result. If there was an overgrowth of complexity or if—for example due to computer errors—chaos erupted, he interrupted the process, so that in the end it was in fact the power of human aesthetic judgment that laid the ground rules.
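
The principle behind such a plate of squares can be sketched in a few lines. What follows is a loose reconstruction of the idea, not Nees's program: the grid size, the linear growth of disorder from top to bottom, the ranges of displacement and rotation, and the function names `disordered_squares` and `to_svg` are all assumptions chosen for illustration.

```python
import math
import random


def disordered_squares(cols=12, rows=22, size=1.0, seed=0):
    """Return a list of squares, each given as four (x, y) corner points.

    Disorder grows linearly from 0 in the top row to 1 in the bottom row
    (an assumed schedule); rows must be at least 2.
    """
    rng = random.Random(seed)  # seeded, so the "chance" is fully reproducible
    half = size / 2
    squares = []
    for r in range(rows):
        disorder = r / (rows - 1)
        for c in range(cols):
            # Centre of the cell, shifted by a random offset scaled by disorder.
            cx = c * size + half + rng.uniform(-0.5, 0.5) * disorder * size
            cy = r * size + half + rng.uniform(-0.5, 0.5) * disorder * size
            # Random rotation of up to 45 degrees, again scaled by disorder.
            angle = rng.uniform(-math.pi / 4, math.pi / 4) * disorder
            corners = []
            for dx, dy in ((-half, -half), (half, -half), (half, half), (-half, half)):
                x = cx + dx * math.cos(angle) - dy * math.sin(angle)
                y = cy + dx * math.sin(angle) + dy * math.cos(angle)
                corners.append((x, y))
            squares.append(corners)
    return squares


def to_svg(squares, scale=20.0, margin=20.0):
    """Render the squares as a minimal SVG string."""
    polygons = []
    for corners in squares:
        points = " ".join(
            f"{margin + x * scale:.1f},{margin + y * scale:.1f}" for x, y in corners
        )
        polygons.append(f'<polygon points="{points}" fill="none" stroke="black"/>')
    return '<svg xmlns="http://www.w3.org/2000/svg">' + "".join(polygons) + "</svg>"


squares = disordered_squares()
svg = to_svg(squares)
```

Nothing in the sketch decides whether a given plate is worth keeping. As in the account above, that decision stays with whoever looks at the output and chooses the seed, the ranges, and the moment to stop.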

In general, it would be a mistake to speak of “creative computers” in this case, because they have yet to meet the criteria for creativity, replacing it instead with randomizers. Variation shifts from invention to stochastics. Generated randomness does not imply, as in the work of John Cage or in the radical aleatoric of Kurt Schwitters, Pierre Boulez or Earle Brown, a literal rushing headlong into Nothingness. It is not a roll of the dice that privileges the event, but rather chance that has been constructed by rules or by mathematical laws, whether through the use of probability functions, or the development of a series of transcendent irrational numbers or the Monte Carlo method or some such. Instead of “negative rules” that simply create a frame for the improbable, we are dealing only with “positive rules” with a disposition to fill a previously empty field with postulates. To this end, Markov chains are usually employed, since with little information they can forecast future developments on the basis of statistical processes and their properties, thus predicting the dynamic evolution of objects and events. But there are other factors at play besides systematized chance, for example, properties of the system such as “emergence,” “mutations”, or “fitness” in an analogy to biological evolution, as well as the compression of data into patterns extracted from larger amounts of other data. Consequently, the act of creatio dwindles to the mathematical simulation of “novelty,” which can mean nothing other than the derivation of an element that was not contained within the previous information. It does not point toward the future but is rather a distillation of the past, to which is merely added a formal negation, namely the property of non containment. 
All future attempts to generate artificial inspiration are bound to fundamentally follow the same path, if with more refined and elaborated methods and without the crude glow of automatized magic that aims to exhibit sphinxes without secrets as truly artistic objects.
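
The claim that such "novelty" is only non-containment added to a distillation of the past can be made tangible with a first-order Markov chain. The following is a minimal sketch under stated assumptions: the tiny corpus of note names is invented for illustration, and `train` and `generate` are hypothetical helper names, not taken from any system discussed here.

```python
import random
from collections import defaultdict


def train(sequences):
    """Collect, for every element, the list of elements that followed it."""
    transitions = defaultdict(list)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            transitions[current].append(following)
    return transitions


def generate(transitions, start, length, seed=0):
    """Walk the chain from `start`, choosing each successor at random."""
    rng = random.Random(seed)
    out = [start]
    # Stop early if the current element was never followed by anything.
    while len(out) < length and transitions.get(out[-1]):
        out.append(rng.choice(transitions[out[-1]]))
    return out


# Invented toy corpus of short "melodies".
corpus = [
    ["C", "D", "E", "C"],
    ["E", "F", "G", "E"],
    ["G", "E", "D", "C"],
]

model = train(corpus)
melody = generate(model, start="C", length=8)

# "Novelty" in the formal sense: the sequence as a whole is not in the corpus ...
is_new = melody not in corpus
# ... yet every single step in it was observed in the corpus.
seen_steps = {(a, b) for seq in corpus for a, b in zip(seq, seq[1:])}
only_past_steps = all(step in seen_steps for step in zip(melody, melody[1:]))
print(melody, is_new, only_past_steps)
```

The generated sequence is new only in that it does not occur in the corpus, while each of its transitions is drawn from what was already there.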

IV.

“Novelty” is hardly a meaningful measure of creativity. Something new can be empty, building only upon an existing scale of values, or it can be weak, stemming only from a combination of preexisting elements. Not even intervals are a sufficient criterion, since they can be a result of spaces, repetitions or simple permutations. “Novelty” as “novelty” thus says little. What is needed is the absolute difference of alterity that sparks the “leap” that we have linked to both reflexivity and a poetics of discovery. The concept of ‘alterity’ however intersects with theories of negative philosophy and theology such as ‘nonconceptuality’, ‘nonpresentability’ or ‘indeterminacy’ and also, seen more broadly, with ‘catachresis’ in the sense of a rhetorical ‘non-figure’ or paradoxes as the limits of logical expressibility. Emmanuel Lévinas linked the category of alterity to, in particular, a space “beyond being,” to “transcendence” and the hermeneutic “break.” As an example he cited the experience of “foreignness,” the “face” and the “entrance of the other,” which make any possibility of relation impossible and are situated only in a practical ethics. “Novelty” in this case would be associated with a fundamental otherness whose absolute difference—or différance to speak with Derrida—refuses in principle to be linked back to what is known, making any determination or assertion impossible.

The concept of creativity is closely linked to these ideas. Its precondition is a radical negation and a radical difference (in the sense of différance) or alterity. It is the magic of ‘nothing’, of the ‘other than’ that cannot be pinned down and annuls an entire system of concepts, transforming its perspective. This has not stopped cognitive psychology and the branch of informatics based on it from making multiple proposals as to how creativity can nevertheless be described—at the price of either a petitio principii or a serious error of categorization. Thus Margaret Boden, in her 1990 study The Creative Mind: Myths and Mechanisms, not only compiled myriad examples of the “computer art” that had already been generated at the time, including works of music, fine art, literature, and design, but she also made it clear that a ‘novelty’ was not simply a ‘curiosity’, but must also fulfil such criteria as being “unusual” or “surprising,” which alone were not sufficient if they were not also “value-laden”: “A creative idea must be useful, illuminating or challenging in some way.” Decisive for creativity is then the “sort of surprise” it has to offer—the type of disruption or transformation. Thus “novel” means both a variance of something that was already there—a development, a supplementary aspect or a change of function that awakens our interest—as well as a historical or epochal event that, like a paradigm shift, causes a shock. It is a historical caesura in existing processes or discourses, which “all at once” flips meaning and makes the “world” seem literally Other.

Tellingly, all of Boden’s examples stem from the world of natural sciences, such as Archimedes’ “eureka!” or August Kekulé’s benzene ring. Once again, the circle closes from the natural sciences to the natural science explanation of an inexplicable condition of scientific innovations. This becomes clear in the manifest circularity of the language she uses and in the characterization of ‘novelty’ using terms such as ‘new’, ‘different’, ‘for the first time’, etc. The definiens presupposes the definiendum, and that which must first be explained is simply repeated. Boden did mark the foundation of this circularity—“Creativity is seemingly a mystery, for there is something paradoxical about it, something which makes it difficult to see how it is even possible”—but her answer to this paradox is founded in the main on linking the creative act to special types of value judgments that differentiate between trivial and nontrivial novelty. Yet the characteristic “value-laden” is, as she herself admits, at best a necessary but not sufficient condition. It remains unclear what is “of value.” If attributes such as “unusual,” “surprising,” or “interesting” prove to be tautological, it is impossible to mark the difference between the “unusual” and the jolt or thrust (Stoß) that, as Martin Heidegger claims in “The Origin of the Work of Art,” is part and parcel of the shock of art: that the “extraordinary [Ungeheuer] is thrust to the surface and the long-familiar [geheuer] thrust down.”

By now we are looking at a fundamental problem that, going beyond Boden’s reflections, reveals the vital connection between creativity, evaluation, and subversion. For there can be no creative act without judgment, just as a supposedly creative object can only be recognized as creative when it receives recognition as such and gains acceptance over time.

Judgment however always occurs post festum and it attains plausibility through its defence. We will only know what had been “of value” and which novel event occurred after we have already established what has lost its novelty. Every work of art is a product of its society and is historically relative. Art does not take place in a vacuum but is situated within a framework of societal relations and cultural dialogues that first give meaning to that which is shown or exhibited. We are dealing with dynamic interactions, hence it is not enough to simply name attributes and pin down the creative process on the creation of something never-before-seen, no matter how radical. More important than any old novelty is that which is new and shares in the fundamental ‘truths’ of an epoch. In this way, the function of art is eminently epistemic: it is only art when it says something about the world and about us and our times, when it intervenes and reveals what is missing. For that reason, any discussion of art or the theory and practice of creativity is not complete without a complex description of poiēsis and technē; every purely technical understanding of the creative process falls short. It is not enough to indicate the strategies for and forms of making something “novel”— it is also necessary to discover the specific ‘leaps’ and ‘rifts’ (Risse) that make art enter into the vocabulary of its times. At the same time, judgment is necessary to situate art in the space of history and confirm its relevance. Judgment however assumes reflexivity. The most important question to ask about artificial creatio or imagination that is bound to an algorithmic rationality is less whether a creative impulse can be simulated mathematically, and more whether the production of a creative difference, in the sense of its calculability, can go hand in hand with the reassessment of its data and the mathematical modelling of its evaluation as such. 
I would like in contrast to posit that the calculation of this calculability cannot itself be calculable because the former creates the conditions for the latter. The reflexive ability to judge cannot be part of a mathematical modelling—rather, it precedes it.

Bense, Nees, Nake, Abraham Moles and others never put their early computer art to the test of this question. They tried out, constructed and did, and exhibited those results that passed the test of their subjective judgment, which in this case became decision-making. In the repertoire of their formal aesthetics, there was either no plan to generate evaluation or it was left to subjectivism, despite their abhorrence of the same. While they did model the creative moment as the product of certain stochastic functions—or group of measurements—nowhere did their schemata include a proper analysis or judgment of randomness as “good” or “stimulating,” much less “epistemically promising” or “valuable.” We can therefore safely say that early computer art was a failure. In a 2012 email dialogue between artists and computer scientists, Frieder Nake admitted as much with admirable openness: “When in those days, as a young guy using computers for production of aesthetic objects, I told people … about this great measuring business, someone in the audience always reacted by indicating: ‘Young man, what a hapless attempt to put into numbers a complex phenomenon that requires a living and experienced human being to judge.’ My reaction then was, oh yes, I see the difficulties, but that’s exactly what we must do! … I guess, looking back without anger, they shut up and sat down and thought to themselves, let him have his stupid idea. Soon enough he will realize how in vain the attempt is. He did realize, I am afraid to say!” Nake added, explicating: “I am skeptical about computer evaluations of aesthetics for many reasons. … Human values are different from instrumental measures. When we judge, we are always in a fundamental situation of forces contradicting each other. We should not see this as negative. 
It is part of the human condition.” To this we should add that the condition of conflict, contradiction, and disagreement, owing to the condition of radical difference or alterity, is the conditio of the social. A reflective judgment is based first and foremost on ‘answering’. The answer already includes the indeterminacy of the ‘to what’ or the negativity of that which is other. And it cannot be had without the ethicalness of a ‘conversion.’

V.

Currently, artificial intelligence research and development sees itself as creating new possibilities for “cutting edge” art, a “new avant-garde, which will transform society, our understanding of the world in which we live and our place in it.” Consequently, such production of “creativity” believes it has transcended the aporias of the problem of judgment. While early computer art was focused only on random processes and their dynamics, the AI of the present pays homage to the “power” of “big data” and the “connectionism” of “neural networks,” as well as the consequent application of statistical and structural methods in the context of “supervised” or “unsupervised” machine learning. Its highest credo is learning, which is seen to include its own evaluation as the measure of success. The possibility in principle of the mechanization of learning processes was already postulated in the 1960s by Turing, Wiener, and von Neumann. Today however, the artificial creativity “task force” has completed the qualitative leap—as members of Google’s Magenta project such as Mike Tyka, Ian Goodfellow, and Blaise Agüera y Arcas attest—by enabling the kindling of creative algorithmics that include judgment. “I’m a computationalist and I believe the brain is a computer, so obviously a computer can be creative,” they say, or “machines are already creative,” and “When we do art with machines I don’t think there is a very strict boundary between what is human and what is machine.”

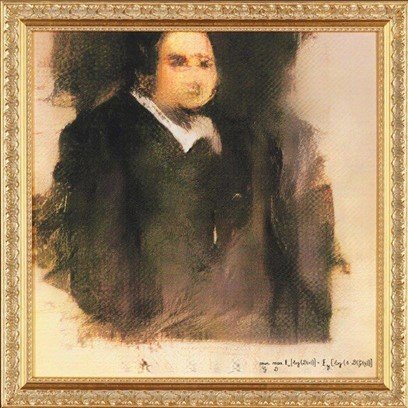

At the same time, there is nothing truly novel about the technical conditions or mathematical methods used. At most it is the way large amounts of data are used and the credo of “recognizing patterns.” Neural networks are not fundamentally more powerful than Turing machines and the formal operativity of computing, despite massive parallel circuits, is not fundamentally different from von Neumann architecture. Nevertheless the black box—the gap between input and output, the opacity of the algorithmic and the mystery of its performance—has become larger, feeding the myth of the “inspired machine.” Rather than focusing on simple sets, the functional structures used now deal with hidden structures, statistical probabilities, mutation indices, frequency distribution, gradients, etc. Applied to the production of “art,” this certainly makes for some “interesting” or “surprising” results, but these are isolated occurrences with no feeling for a global narrative, much less for the irritations and tragedies of human experience. This is best demonstrated by the “inception” of the neural network nightmares produced by Alexander Mordvintsev and Mike Tyka’s DeepDream or by Ahmed Elgammal’s and Ian Goodfellow’s Creative or Generative Adversarial Networks (GANs), which brought forth such hyped examples as the portrait of “Edmond Belamy” by Obvious, a group of French computer scientists. All of the above examples are typically the results of composites. Belamy for example came from “training” a neural network on 15,000 portraits by artists from the 14th to 20th century compiled in the WikiArt dataset. The supposedly spectacular result was auctioned by Christie’s in New York for the sum of $432,000. (Fig. 2, 3: Obvious: Portrait of Edmond Belamy, from La Famille de Belamy) The process, which
is similar for the creation of images, poems, or musical improvisations by AI, is based in principle on the compilation of hundreds of thousands of details, word orders, or compositional fragments within “samples” whose content can
be clearly classified. Integral to this process is thus the prejudices of a nominalist semiotics. It assumes that artworks consistently obey the dictates of representational depiction and certain objects, people, faces or animals can be seen in them, or that poetry is about content that can be stated or that music is made of easily recognizable sequences of melodies and rhythms. The same holds true for architectural and other designs as well as screenplays or TV series: their basis consists of analyzable elements such as idiosyncrasies of style, typical colors, certain phraseologies, or catalogable figures of compositions from Vivaldi, Bach, Mozart or modern pop music. The list alone reveals the flattening power of this process. We are confronted with elements that can be made discrete and refer to nothing but clichés. The deadening of the aesthetic process that Theodor W. Adorno ascribed to the stereotypical character of early 20th century film music holds all the more true for the “artificial art” of artificial intelligence. While its foundation seems to rest upon human creations, these are no more than a catalogue of labeled signs, reduced to that which can principally be expressed in numbers and mathematical functions. Nowhere is it about that which is “artistic” in “art” but only about the possibility of identification, availability, and translatability into data, as well as their connection and calculation with the goal of transforming them into other data.

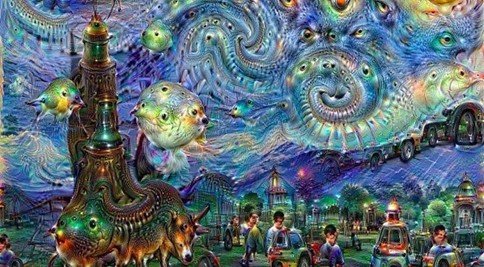

Inceptionalism, as Alexander Mordvintsev and Mike Tyka initially dubbed their nominally new and avant-garde style, can be seen as paradigmatic for such “art.” Neural networks always function the same way, whether they are recognizing things, people, emotions, traffic, or art: a large number of hidden “layers” are interconnected and provided with a large amount of statistical and standardized input. Depending on the output, the evaluation of which is either “supervised” and judged by human beings or “unsupervised” and assessed by other, similarly constructed, machines, certain paths are strengthened, analogous to neural pathways in the brain. But this machine process is limited to only those elements that can be transferred mathematically into geometrical symmetries, algebraic homomorphisms, matrices, etc. and whose patterns therefore can be recursively transformed into other patterns. This sets specific conditions for the input. It must be possible, for example, to label data sets to which a primitive semiotics must be applicable, whether the representation of simple things or falling or rising tone series or the like—as long as they can be transferred into discrete numerical values. These are then input into the layer L1 where patterns are recognized in the numerical data which then become the input data for the layer L2 which in turn detects patterns, etc. (Fig. 4: Inceptionalism). The result is a readable output which, depending on the representational logic that served as a foundation, statistically conforms or does not conform to what is shown, meaning the output can (or cannot) be assigned to a functional category. Remarkably, when the image of ‘object-x’ is input, the output “recognizes” this object at a percentage of y, whereby one cannot truly speak of ‘recognition’, but at best of the reproduction of a name. The principle thus describes a mechanical form of discrimination based on probability. It does not consist of “seeing” something—in humans, the concept of sight always assumes “seeing seeing”—but of “sensing” discrete structures or diagrams and assigning to them an approximate value: 96% person x, 51% y-emotion, 85% painting by z, 68% in the style of k.
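The layered procedure just described, in which numerical input passes through L1 and L2 and ends as a percentage attached to a name, can be illustrated with a minimal sketch. Everything specific in it is an assumption made for illustration: the weights are hand-picked rather than learned, the three-number "image" is hypothetical, and the labels are simply borrowed from the examples in the text. It also uses a softmax over mutually exclusive labels, so the percentages sum to 100, which is only one of several ways such scores are produced.

```python
import math

def dense(weights, bias, inputs):
    # One layer: each output unit is a weighted sum of the inputs plus a bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]

def relu(values):
    # Keep positive responses, suppress negative ones.
    return [max(0.0, v) for v in values]

def softmax(scores):
    # Turn raw scores into probabilities that sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical, hand-picked weights (a real network would learn them).
W1 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]    # layer L1: 3 inputs -> 2 units
B1 = [0.0, 0.1]
W2 = [[1.2, -0.7], [-0.4, 0.9], [0.3, 0.3]]  # layer L2: 2 units -> 3 labels
B2 = [0.0, 0.0, 0.0]
LABELS = ["person x", "y-emotion", "painting by z"]

def classify(pixels):
    hidden = relu(dense(W1, B1, pixels))   # patterns detected in L1
    scores = dense(W2, B2, hidden)         # patterns of patterns in L2
    return dict(zip(LABELS, softmax(scores)))

result = classify([0.9, 0.1, 0.4])
for label, p in result.items():
    print(f"{p:.0%} {label}")
```

Nothing in this pipeline refers to what is depicted: the output is a distribution over names, which is the sense in which the text speaks of discrimination rather than recognition.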

Thus while the process is at first merely an artificial intelligence of identification—in the sense of detecting the ‘thing’, ‘face’, ‘emotion’, or ‘art’—this process can be changed from reproductive to “creative” when, as Mordvintsev and Tyka have shown, it is stopped in the “middle” and turned back on itself so that, contrary to its initial purpose, it repeatedly acts upon itself. Its output is in that case not a percentage of identifiable objects that can or cannot verify “tags,” but rather strange compositions that do in fact fulfil Boden’s minimal criteria for creativity, namely that they are “surprising” or “unusual.” “Mordvintsev’s adventure …,” Arthur I. Miller contended, “was to transform completely our conception of what computers were capable of. His great idea was to let them off the leash, see what happened when they were given a little freedom.” And further: “Mordvintsev’s great idea was to keep the strength of the connections between the neurons fixed and let the image change,” until nightmarish figures appeared—uncanny hallucinatory hybrid creatures similar to the drawings of schizophrenics (Fig. 5: Inceptionalism). Mordvintsev and Mike Tyka coined the name “Inceptionalism” for this stylistic presence in order to address its new beginning. One can of course celebrate these “works” as a new generation of AI-assisted digital art. Or one can call them what they are: psychedelic kitsch.
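The reversal Miller describes, keeping the connection strengths fixed and letting the image change, amounts to gradient ascent on the input rather than on the weights. The following is a deliberately tiny sketch of that idea, not the DeepDream code: a single hypothetical "neuron" with three fixed weights stands in for a whole network, and a three-number list stands in for an image.

```python
import math

# Fixed connection strengths (hypothetical values); they are never updated.
WEIGHTS = [0.8, -0.5, 0.3]

def activation(image):
    # How strongly the fixed "neuron" responds to the current image.
    return math.tanh(sum(w * p for w, p in zip(WEIGHTS, image)))

def dream_step(image, rate=0.1):
    # Gradient of tanh(w . x) with respect to the image x is (1 - a^2) * w.
    a = activation(image)
    gradient = [(1.0 - a * a) * w for w in WEIGHTS]
    # Change the image, not the weights, so that the response grows.
    return [p + rate * g for p, g in zip(image, gradient)]

image = [0.1, 0.2, 0.1]
before = activation(image)
for _ in range(20):
    image = dream_step(image)
after = activation(image)
print(f"response before: {before:.3f}, after: {after:.3f}")
```

Each step pushes the image toward whatever the fixed weights already respond to, which is why the procedure amplifies patterns the network was trained on rather than producing anything outside them.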

The production of the now famous Portrait of Edmond Belamy, from La Famille de Belamy (2018) followed, mutatis mutandis, a similar path. Belamy is a computer-generated painting “signed” by the central component of its algorithm code and inscribed in a fictive historical series of family portraits. Created in the style of eighteenth-century paintings, it occupies, as it were, the future spot in the ancestral portrait gallery (fig. 3). One version was extracted from eleven possible portraits, all potential variants of the non-existent “Edmond Belamy” (fig. 4); the painting’s ironic title is a homage to Ian Goodfellow, the pioneer and inventor of generative adversarial networks (GANs) on which the image generation was based. While journalists have claimed that the portrait is “aesthetically and conceptionally rich enough to hold the attention of the art world,” the members of the group Obvious, Hugo Caselles-Dupré, Pierre Fautrel, and Gauthier Vernier, say their concept was aimed rather at establishing “GANism” as a legitimate art movement. They aimed to make non-human aesthetics acceptable, putting it on the same level as human aesthetics in line with their motto, “Creativity isn’t just for humans.” The production principle is based on two neural networks that compete with one another: a “generator” and a “discriminator.” Both are fed with the same stuff, but they have different tasks. While the generator attempts to create a portrait that fulfills the criteria, the discriminator determines whether it is “typically human” or should be junked. “Creation” and “critique” alternate, but the anthropomorphic denotations should not distract from the fact that the operation at the foundation of both the generator and the discriminator consists simply of recognizing patterns. It is still no more than statistics; “judgment” is not based on criteria, but solely on supervised machine learning processes, which in turn have their base in human choice.
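The alternation of “creation” and “critique” can be made concrete with a toy sketch. It is not the code used by Obvious, and every specific in it is an assumption for illustration: the “portraits” are single numbers drawn around 4.0, the generator merely shifts random noise by one parameter `b`, and the discriminator is a one-line logistic classifier with parameters `w` and `c`. No claim is made that this toy converges; it only shows the two alternating update steps.

```python
import math
import random

def sigmoid(s):
    # Clamp the input so math.exp cannot overflow.
    s = max(-60.0, min(60.0, s))
    return 1.0 / (1.0 + math.exp(-s))

def train(steps=2000, lr=0.05, seed=0):
    rng = random.Random(seed)
    w, c = 0.0, 0.0   # discriminator: D(x) = sigmoid(w * x + c)
    b = 0.0           # generator: G(z) = z + b
    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)        # a sample of "human-made" data
        fake = rng.gauss(0.0, 1.0) + b    # a generated sample

        # "Critique": nudge D toward 1 on real and toward 0 on fake samples.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w -= lr * ((d_real - 1.0) * real + d_fake * fake)
        c -= lr * ((d_real - 1.0) + d_fake)

        # "Creation": nudge G so that D rates its output as more real.
        d_fake = sigmoid(w * fake + c)
        b -= lr * (d_fake - 1.0) * w
    return b, w, c

b, w, c = train()
print(f"generator shift b = {b:.2f}")
print(f"D(4.0) = {sigmoid(w * 4.0 + c):.2f}")
```

Both steps are the same kind of operation, a small numerical adjustment that lowers a statistical loss, which is the point the paragraph above makes about the anthropomorphic vocabulary.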

VI.

The discriminator, however, is not an evaluator—that role is reserved for those who make the final choice and from many possible results choose those that seem to be most “interesting.” Machine learning neural networks therefore only seem to integrate the problem of judgment. In fact, not even “unsupervised” machine learning, in which only machines are involved following a game-theoretic model, can be said to judge—discriminating is not the same as judging. Furthermore, during the production process, all available material fed to the “art machines” stems from artistic traditions. Thus it is elements of human history that provide the foundation for determining which characteristic elements can be used to make “new” works. The machine’s source is humankind’s art, whose total is expanded using probability functions so that the machines “learn” to carry into the future what has already been done in the past. So while the main motor of early digital art as programmed by Nees, Moles, and Bense used randomizers for creativity, today’s elaborate AI models are essentially founded in processes of plagiarism that do the same thing as their predecessors: simulate mathematical contingency to arbitrarily increase the amount of aesthetic works. The main constructive principle is mimicry, except that the core of the source of creativity and the event of alterity that it needs, as well as the passible receptivity of human inspiration, is replaced by the simple mechanism of mathematical variability—whether in the form of statistics, the use of Markov chains, the modelling of evolutionary mutations or even the “strange attractors” of chaos theory. They imitate that which should be considered “incalculable.” “Neural networks are designed to mimic the brain,” Arthur I. Miller wrote; accordingly, AI “creativity” manifests as a form of innovation that mimes creativity. It would be a categorical mistake, in the literal sense, to confuse it with human creativity.
Instead it generates an at best pale imitation or an impulse to believe in (in the sense of Kendall Walton’s concept of make-believe) similarities—just as Turing’s imitation game once led people into the trap of “competing” with a computer. Optimization strategies in artificial intelligence research have almost all been concentrated solely on conceiving of machines as analogies of humans. They then confront us with seemingly convincing “doppelgängers” that are in fact nothing other than a repetition of ourselves.

Another method of creating such pseudo-analogies derives from the theory of complexity (Fig. 6: Casey Reas: Generative Art). Here, non-linear systems come into play, which exhibit indeterminate and unpredictable or even uncontrollable outcomes. Every result consists of a non-reproducible deviation resulting not from random values, but from emergent processes. They differ both from performances and from Gilles Deleuze’s and Jacques Derrida’s ‘play’ of repetition and difference because they indicate singularities rather than fluctuations and the occurrence of non-calculable effects. Second-generation concepts of “generative art” exploited such effects by using “evolutionary algorithms” that seem to create images, musical pieces or structures that proliferate on their own. We are thus confronted with forms that seem to grow and are as symmetrical/non-symmetrical as natural phenomena even though they derive from rules—as if we were observing filamentary organisms or accreting bacterial cultures in petri dishes. Here, “art” resembles artificial fauna and flora, an ecosystem programmed by designers who allow ornaments and crystalline patterns to unfold synthetically as if they were digital creatures that begin to dance according to their own inner rhythms. This is all the more true of AIVA, an AI-supported ‘composition machine’ that has learned from, primarily, pop music, using the same method, in order to perpetuate it endlessly. They are conspicuously similar on the visual as well as the acoustic level, in that the productions compensate for their aesthetic poverty with an ongoing serialism. “Artificial Art” is first and foremost industry; its results suffer from the impregnated texture of the market. Here it becomes critical to be able to make distinctions. That which may look like an unforeseeable event or an indetermination is as formal as the underlying code. Radical indeterminacy, in contrast, denotes a negative concept outside of every category, while generative creations derive from statistics. What is inherent in its artifacts is not revolution but optimization, transcription, and development through mutation, which can never be anything but perpetuation and variance.

It is for this reason that huge amounts of data are brought into play, as well as complex neural networks with thousands upon thousands of layers whose massive numbers are meant to mimic the neural structure of the brain, which, it is claimed, functions just as “digitally” as computer systems. Warren McCulloch and Walter Pitts famously postulated this homology as early as 1943 in “A Logical Calculus of the Ideas Immanent in Nervous Activity,” leading von Neumann, despite his skepticism, to assume he was dealing with an empirical fact. However, in the end, how the human brain functions is no more than a debatable observation dictated by the scientific regime of the times, in this case one that supposed an equivalence between two seemingly binary structures, namely a Turing machine and the “firing” or “not firing” of synapses between nerve cells. But this approach remains reductionist; it not only reduces brain activity to the transmission of signals, ignoring the role of glial cells, the constant dynamic balance between excitation and inhibition, and even the plasticity of the human brain, but also individualizes the process of thought and couples it to one privileged organ. The fact that people are social creatures who need others to create “meanings” or that both interpersonal relationships and those between people and “worlds” are necessary for there to be anything like thoughts that can be shared—or creative inventions—none of that is mentioned. The lone principle is a monistic materialism bent on finding superficial relationships whose simulacra seem sufficient to substitute one world—human reality and its conditions—with another: the world of formalized automatons. In this process, the mathematical principle becomes usurpative; not only has it been subjugating the description of reality since the early modern era, but now it is also taking on the genesis of every single thought.
However, this occupation brings forth an aporia, as Hilary Putnam has shown in his exploration of the “brain in a vat” sci-fi thought experiment. An isolated brain would not be able to judge its own system of reference and thus create consistent criteria for truth, reality, and meaning. Since they are linked neither to a history nor to the outside world, test-tube brains, androids, and robots would not be able to decide where they are (in a vat or in a body or somewhere else) or whether or not their ideas about themselves were true or false. Therefore algorithms are not able to represent more than humanoid similarities that are unable to either best us or fool us. They are simply different: the human imagination and the ingenuity of the mind remain incommensurable in comparison with computational innovation-machines. The only possible outcome of a comparison of human and computer is to ascertain that they are incomparable. This can be seen best in the “artificial art” churned out by computers. Mordvintsev and Tyka’s Inceptionalism, for instance, reiterates existing aesthetic styles by distorting them, for example randomly mixing various styles. In this their models are preserved, not only because they use readily accessible photos, paintings, or musical compositions as their templates, but also because their morphological experiments are in the end meant for human eyes and ears and their preferences. Like slaves, programs fulfil their programmers’ wish to regurgitate that which is “pleasing” and generally accessible, that is to say, that which is in line with their taste. That is the reason these works draw from pop culture. They reproduce that which is well-known. At the same time, the aesthetics of representation, especially monsters, is celebrated as the return to paradise. The images of the “Inceptionalists” are trivial and exhibit a clear preference for bizarre creatures that, like Belamy, profess in vain to be original. The same can be said of Leon Gatys’s Style Transfer, Jun-Yan Zhu’s CycleGANs, or Mario Klingemann’s “Transhancements,” whose “neurographical” creatures look like they have jumped out of blurry Francis Bacon paintings (Fig. 7: Mario Klingemann, Cat Man).

VII.

It would be wrong to say that human creativity also only repeats what went before it, that it does not bring forth but only transforms or, as Nelson Goodman said succinctly, “the making is a remaking.” Certainly, the imagination too, as Kant also knew, can only imagine that which it has already perceived. And yet that is only half true. For every design, every positing, also at the same time articulates something unimaginable, something foreign or utopian. Inventions are more than just metamorphoses. They also always include a moment of negativity, something unsubsumable, at least as regards the inexplicability of the ground of the constructive principles of the construction in the construction itself. Transgression is an immanent part of all human transformation, because the transformative act veils itself in the transformed. Artificial “creativity” in contrast is based in rules, in statistical probabilities or mathematical contingencies derived in principle from a network of formal interrelations and paths, no matter how hidden their ground or rulebook. While the computations may seem like free associations, in the end, despite the system’s black box, they remain algorithms, even if no formula can be given. In contrast, the ‘leap’ of the différance, the difference and deferral, or the “alter” in “iter” as Derrida has put it, is of another dimension and quality because it necessitates the sudden appearance of alterity, of the event or a temporally determined and unrepeatable moment in order to manifest.

Thus “artificial creativity” can at best be described as the performance of an appearance (Schein), a looking-like whose superficial visual and acoustic spectacle proves its inherent poverty. Its telos is masking or the masquerade, no longer to be recognized as such by its human recipients, so that artificial intelligence first comes into itself in, to speak with Hegel, a “deep fake.” In the era of “post-truth” and “alternative facts,” the morphed doll face can no longer be distinguished from the “real” one. Examples of this are found in innumerable manipulated images, computer generated journalism, the continuations of Johann Sebastian Bach’s chorales, preludes by David Cope’s program Emily Howell (EMI) or in the recently announced performance of Beethoven’s “new” tenth symphony, interpolated by an AI from rare notes found in his estate. The scandal is not the fact of a “faked” Beethoven, but the desire for the same, the belief in the possibility of his reincarnation, as if there were no death, no ending, and no discontinuation of his creative energy. And in fact, online comments are rife with emotion and unreserved admiration. All that machines can currently produce is an “as-if,” an illusion that we, on the receiving side, are more than willing to be deceived by. Here we fall prey to the same systematic error found in the Turing test—whether passed or failed—which fails to inform us about the difference between human and artificial intelligence beyond the undecidability between different types of representation. Turing’s “error” was primarily that he built too many adjustable premises into the setting of his test; not only the oft-criticized biased concentration on written and therefore putative semiotic communication, but also a prejudice towards a logics of decision-making that uses the same mathematical model for the question of possible “machine” intelligence as for its answer, as well as for the process by which it is verified.
Thus, the structure of its judgment is circular. Just as Turing’s argumentation runs in circles, so do the many attempts to send people down the wrong track by asking them to decide for example which prints are computer-generated and which taken by a photographer, or which novel is the product of an algorithm and which stems from the hand of a human author. Such tests are senseless because they are created to systematically fool people, because it is not the superficial lack of difference that decides between art and non-art, but rather the singularity of an experience and its historical meaning, which can only be guaranteed by the mandate of authorship.

Why then have “art from the computer” at all? What is the attraction of constructed creativity, the will to the machine’s triumph? The argument that, alongside artificial simulation, aspects of “thought” are invoked that seem most human, amounts to no more than positing that we learn to understand better through the creation of “artificial equivalents” than through anachronistic philosophical theories. But that argument holds only if philosophy has already ceded to the natural and technological sciences. What speaks against it is the immanent incommensurability of human vs machine production and creativity. The machine has the irrefutable advantage of speed, mass production, and the métier of calculation. Therefore the true argument cannot be anthropological when the constructivity of computations and robotics is used to understand the nature of the human, but only an economic argument of acceleration. What is creative about artificial intelligence is not AI itself, but its ability to generate a lavish abundance of potential images, writings, and musical composition, because machines always deal with series, overproduction, and the “more-than.” In milliseconds, its functions can churn out more “works” than human artists, poets, or composers in a lifetime. For this reason, the milieu of digital art is not concentration, but excess. That is an exact reversal of how the traditional arts work, which are interesting not because of their creative style or mannerism, but for their cultural impact. The “works” by machines produce no more than uninspired excess, an overproduction of commodities whose only draw is an insipid fascination in the fact that they were supposedly made without the help of people. This is a revamping of the myth of the Acheiropoieta, and contains as little truth today as it did then, because the site of creativity is not the impenetrable depths of neural networks, but the design of algorithms and their implementation inside their structures.

VIII.

Art however can never be the result of mass fabrication, certainly not at the speed of algorithmic exorbitances. Rather it points continuously in new and different ways to the abyss at the center of apparitions, to their precarity and fragility, confronting us again and again with the unfulfillability of our existence and the terror of its finiteness. This encounter is only possible through sustained reflexivity. Art always refers back to us. It is not from machines for machines, but for people, and neither the décor, the promise of immersion nor the multiplicity of unheard-of beauties or a never-before-seen is the source of its striving. Rather art longs to discover the traces of that which does not appear in appearance, of the labyrinth of nothingness in being. At the same time, it confronts us with a moment of transcendence. This is the spiritual foundation of art. Aesthetics does not exhaust itself in phenomenality—that would lead to the ideology of aestheticism—its epistemic force is found rather in holding us in a relationship with the inaccessible, with the borders to the world and to death, while also challenging us to take a stand—in our lives and in our socio-political contexts.

The artificial creatures from the computer in contrast never reach these heights of aesthetics (Fig. 7: Inceptionalism). Certainly, there are artists who see themselves as engineers—in this case however, software engineers pose as artists and, instead of thinking aesthetically, exact technical solutions, solutions that suffer from naïve concepts of both art and creativity. These are founded on neither theories of aesthetics nor theories of art history, but solely on the computer sciences, the neurosciences, and cognitive psychologies. One could say they operate tautologically in their own disciplinary fields. “Thinking” appears to be just another kind of computation, “learning” becomes a behaviorist and cybernetic feedback loop, and “making art,” a game. That goes hand in hand with simplified concepts that either stay at the level of eighteenth- and nineteenth-century aesthetic categories, or indulge in a radically relativist idea of art. In the first case, AI researchers refer to entries from the lexica of English Utilitarianism or perhaps Kant to underline criteria such as, in the main, “joy,” “pleasure,” or other aesthetic feelings. Jon McCormack and Mark d’Inverno also name “beauty” and “emotional power.” These astonishing anachronisms not only regurgitate ideas that were swept away by the artistic avant-garde at the beginning of the twentieth century, they exhibit a glaring lack of understanding of the manifest epistemic power of the arts.

In the case of the second group, one finds a vulgarization of the aesthetics of reception rampant in the 1980s and ’90s, according to which art is first made in the eye of the beholder, or the ear and judgment of the reader or audience. “Everybody has their own definition of a work of art. If people find it charged and inspiring then it is,” Richard Lloyd of Christie’s is cited by Arthur I. Miller as saying. Or Mike Tyka, also cited by Miller: “To me, art is judged by the reactions people have.” These naïve statements, whose explicit subjectivism can hardly be surpassed, are de facto resigned to their inability to find an adequate concept of art, subsequently pushing for what Adorno has called the Entkunstung der Kunst or “deaestheticization of art.” Or, as he says in the opening passage of Aesthetic Theory, “It is self-evident that nothing concerning art is self-evident anymore, not its inner life, not its relation to the world, not even its right to exist.” But the gesture of abstention implies the opposite of Adorno’s skeptical adage; rather, with a somewhat forced democratic verve, it bows down to the taste of the masses. To understand where this may lead, one need only imagine the natural sciences took a similar approach. Forced to agree with majorities, they would give in to the pull of arbitrary theories regardless of their truth-content and would risk becoming trivial. Every call for general proof or evidence would be denounced as elitism. But democratization has as little place in the sciences as voting averages are necessary for claims of validity. Science is not the arena of political majorities, the only thing that counts are the more convincing methods, the sounder evidence, and the better arguments. Analogously, debates on the democratization of the arts often lead to an aesthetics most interested in pleasing the largest number of people—at the expense of what is actually artistic about art.

It should also be noted that it is no coincidence that the art critics most likely to take this position are the most market-oriented, and that it is the representatives of large auction houses or Google software engineers who are most likely to parrot them. Their relativism is neither anti-normative nor critical of institutions; it is simply geared toward a specific purpose. It reneges on making a judgment, leaving that up to machines that are, however, unable to do so. “As should be obvious …, computational aesthetic evaluation is a very difficult and fundamentally unsolved problem,” Philip Galanter summarizes in his overview of the field, Computational Aesthetic Evaluation: Past and Future—and to this day there is no evidence that it will ever be solved. But any understanding of art that lacks the power of judgment must remain without history, politics, or aesthetics. Thus, that which can be realized via machine learning is linked neither to sociality nor to zeitgeist, but acts as contextless, arelational, and “ab-solut” as mathematics. No “artificial art” ever struggles with accepting only the “gift” of materiality, or with the limitation of freedom in compositions, or the dialectic of feasible forms and unfeasible materials, not to mention the alienation of an era or the distortions of the social and its environmental order. The designs they let loose refer to nothing more than the parameters of their own technical conditions; they contain neither social engagement nor historical impact, nor do they intervene in their environment: they are what molds the machines. Art in contrast is constantly intervening; it is its own kind of thinking, rooted in ambiguity, contradictions, and catachresis. Not only is art, as Arthur Danto suggests, “about something,” it is first and foremost about itself, for there is no art that does not deal with itself, with its own concept, purposes, era, and utopian potential.
Always involved with itself, with its own mediality and praxis, it transgresses and transforms these to also change itself. Art acts with itself, against itself, and beyond itself to shift its place, its dispositif, and its conditions. Art is therefore first and foremost a practice of reflexivity. It is complete in the “critique” of itself, if we understand critique to mean a delimiting that continually questions place, potential, purpose, and surrender. By invoking its own end it continually transcends itself and makes something Other of itself. Art is never static, but always erratic, volatile, and transcendent. That is why Kazimir Malevich, to name just one example, understood the ‘image’ of an image as “the icon of our times”; simultaneously the apex and the end of all iconicity. The empty form or obliteration, to which he gave the ascetic name “square,” robs the image of all figurality and yet remains an image (Black Square, 1915). The art of the square is in this sense “art about art” which attempts to both finish and begin an artistic era in equal measure. For a machine, such eccentricity would be pointless. Its principle of production does not allow for negativity.

Early twentieth-century avant-gardism insisted on the indefinability of art and the arbitrariness of the word. Had it not done so, it could not have newly ignited the angry protests and conflicting discourses and debates on what art is, what art can be, and what not. Digital art in contrast is exclusively ludic; it is most similar to an experimental setting, a l’art pour l’art, or a non-committal game whose toys are neural networks and their machine learning programs. But their results elicit only responses such as “exciting” or “never seen before” as if this said something substantial about art. In the meanwhile, it has a degressive effect. The ever-accelerating slew of computer-generated images, stories, music, and films that are beginning to glut the market both devalues art and makes the processes of pop culture ubiquitous. Alongside the increasing rates of production and repetitions, the complexity of the results is continually sinking.

The bar has also been lowered for the concept of art, whether or not it is understood aesthetically. To confuse creativity with novelty restricts the code. Creativity, and with it artistic practice, has reflexivity as its terminal. The act of ingenuity, the sharp-witted intuition of the creative impulse, is founded mainly in discovery, or in as yet undiscovered cognitive inhibitions or hindrances that must be overcome to make room for other thoughts. What is decisive is not their novelty, but the act of opening. This however presumes a ‘thinking thinking’ that does not end in recursivity, but is always triply terminated as: thinking the object (what), thinking the act (that), and thinking the mode of thought (how) or the met’hodos and its medium. The interaction and triangulation of these three and the constant shifting and mixing of levels cannot be reconstructed in the algorithmic mode. Where thinking breaks with its own experience, where it abandons its opacity and cooptation in an inherent dogma, logic and mathematics are at a loss. What is needed is that ‘leap out’ that necessitates the audacity of “alternative thinking” and that cannot be reproduced with the same objects in the same modus and the same performance of the act. That is why the paradox has such an important place in the creative moment. It begins where we are at a dead end, where we are confronted with unsolvability (the insolubilia of medieval logic), with the desperation of the je ne sais quoi, or where we struggle with the inadequacy of the usual methods and, simultaneously, are unable to continue to travel well-trodden paths. Then all that remains is a thinking that hangs in the balance, in the middle, the tertium datur. “Open Sesame—I want to get out,” is the first aphorism in Stanisław Jerzy Lec’s Unkempt Thoughts. The creative leap seeks an opening, not admission. It searches for the exit, not the entrance. It cannot be taken with probability functions, nor can it be stumbled upon by chance. It comes always and only in the blink of an eye, in a moment of improbability, of a rigorous undecidability that overrides all logics of decision, calculability, and simulation.

Translated from German by Laura Radosh

Literature

Adorno, Theodor W. Aesthetic Theory. Translated by Robert Hullot-Kentor. New York: Continuum, 1997.

Adorno, Theodor W. and Hanns Eisler. Composing for the Film. Oxford: Athlone, 1994.

Beck, Martin. “Konstruktion und Entäußerung. Zur Logik der Bildlichkeit bei Kant und Hegel.” PhD dissertation, Freie Universität Berlin, 2018.

Bense, Max. “Einführung in die informationstheoretische Ästhetik.” In Ausgewählte Schriften Bd. 3, 255-336. Stuttgart: Metzler-Verlag, 1998.

Bense, Max. “Small Abstract Aesthetics.” In A Companion to Digital Art, edited by Christiane Paul, 249-264. West Sussex: Wiley, 2016.

Boden, Margaret. The Creative Mind. Myths and Mechanisms, 2nd ed. London: Routledge, 2004.

Broussard, Meredith. Artificial Unintelligence. Cambridge: MIT Press, 2018.

Danto, Arthur. The Transfiguration of the Commonplace. Cambridge: Harvard University Press, 1981.

Davidson, Donald. “Turing’s Test.” In Problems of Rationality, 77-86. Oxford: Clarendon Press, 2010.

Davis, Philip J. and Reuben Hersh. The Mathematical Experience. Boston: Harvard Press, 1981.

Derrida, Jacques. “Différance.” In Margins of Philosophy, translated by Alan Bass, 3-27. Chicago: University of Chicago Press, 1982.

Derrida, Jacques. “Signature Event Context.” In Limited Inc., translated by Samuel Weber, 1-24. Evanston: Northwestern University Press, 1988.

Dyson, George. Turing’s Cathedral. The Origins of the Digital Universe. New York: Vintage, 2012.

Elgammal, Ahmed. “What the Art World Is Failing to Grasp about Christie’s AI Portrait Coup.” Accessed April 6, 2020. https://www.artsy.net/article/artsy-editorial-art-failing-grasp-christies-ai-portrait-coup.

Galanter, Philip. “Computational Aesthetic Evaluation: Past and Future.” In Computers and Creativity. Edited by Jon McCormack and Mark d’Inverno, 255-293. Berlin: Springer, 2012.

Goodman, Nelson. Ways of Worldmaking. Indianapolis: Hackett Publishing Company, 1978.

Heidegger, Martin. “The Origin of the Work of Art.” In Off the Beaten Track, edited and translated by Julian Young and Kenneth Haynes, 1-56. Cambridge: Cambridge University Press, 2002.

Horkheimer, Max. Critique of Instrumental Reason. Translated by Matthew O’Connell. London: Verso, 2012.

Kant, Immanuel. Critique of Pure Reason. Translated and edited by Paul Guyer and Allen Wood. Cambridge: Cambridge University Press, 1998.

Kant, Immanuel. Critique of Judgment. Translated by Werner S. Pluhar. Indianapolis: Hackett Publishing Company, 1987.

Kleene, Stephen Cole. Introduction to Metamathematics. Groningen: Wolters-Noordhoff Publishing, 1971.

Lec, Stanisław Jerzy. Unkempt Thoughts. Translated by Jacek Galazka. New York: St. Martin’s Press, 1962.

Lévinas, Emmanuel. Otherwise than Being or Beyond Essence. Translated by Alphonso Lingis. Dordrecht: Kluwer Academic Publishers, 1991.

McCormack, Jon and Mark d’Inverno (eds.). Computers and Creativity. Berlin: Springer, 2012.

McCormack, Jon and Mark d’Inverno. “Heroic versus Collaborative AI for the Arts.” Accessed April 8, 2019. https://www.ijcai.org/Proceedings/15/Papers/345.pdf.

McCulloch, Warren and Walter Pitts. “A Logical Calculus of the Ideas Immanent in Nervous Activity” (1943). Accessed May 18, 2020. http://www.cse.chalmers.se/~coquand/AUTOMATA/mcp.pdf.

Mersch, Dieter. “Ästhetisches Denken. Kunst als theoria.” In Ästhetische Theorie. Edited by Dieter Mersch, Sylvia Sasse, and Sandro Zanetti, 241-260. Zürich: Diaphanes, 2019.

Mersch, Dieter. “Benses existenzieller Rationalismus. Philosophie, Semiotik und exakte Ästhetik.” In Max Bense. Weltprogrammierung. Edited by Elke Uhl and Claus Zittel, 61-81. Stuttgart: Metzler Verlag, 2018.

Mersch, Dieter. “Digital Lifes. Überlegungen zu den Grenzen algorithmischer Rationalisierung.” In Augmentierte und virtuelle Wirklichkeiten. Edited by Andreas Beinsteiner et al., 53-76. Innsbruck: Universitäts-Verlag, 2020.

Mersch, Dieter. “Ideen zu einer ‚Kritik algorithmischer Rationalität‘.” Deutsche Zeitschrift für Philosophie 67, no. 5 (2019): 851-873.

Mersch, Dieter. “Kreativität und Künstliche Intelligenz. Einige Bemerkungen zu einer Kritik algorithmischer Rationalität.” Zeitschrift für Medienwissenschaft 2 (2019): 65-74.

Mersch, Dieter. “Positive und negative Regeln. Zur Ambivalenz regulierter Imaginationen.” In Archipele des Imaginären. Edited by Jörg Huber, Gesa Ziemer, and Simon Zumsteg, 109-123. Zurich: Edition Voldemeer, 2009.

Mersch, Dieter. “Sprung in eine neue Reflexionsebene.” In Ressource Kreativität. Kunstforum International, vol. 250. Edited by P. Bianchi, 136-149, 2017.

Mersch, Dieter. “Turing-Test oder das ‚Fleisch’ der Maschine.” In Körper des Denkens. Edited by Lorenz Engell, Frank Hartmann, and Christiane Voss, 9-28. Munich: Fink-Verlag, 2013.

Mersch, Dieter. Epistemologies of Aesthetics. Translated by Laura Radosh. Zurich: Diaphanes, 2015.

Mersch, Dieter. Ereignis und Aura. Untersuchungen zu einer Ästhetik des Performativen. Frankfurt/M: Suhrkamp, 2002.

Mersch, Dieter. Was sich zeigt. Materialität, Präsenz, Ereignis. Munich: Fink-Verlag, 2002.

Miller, Arthur I. The Artist in the Machine. The World of AI-Powered Creativity. Cambridge: MIT Press, 2019.

Moor, James H., ed. The Turing Test. The Elusive Standard of Artificial Intelligence. Dordrecht: Kluwer Academic Publishers, 2003.

Nake, Frieder. “Computer Art: Where’s the Art?” In Bilder Images Digital. Computerkünstler in Deutschland 1986, 69–73. Munich: Barke-Verlag, 1986.

Nees, Georg. “Artificial Art and Artificial Intelligence.” In Bilder Images Digital, 58–67. Munich: Barke-Verlag, 1986.

Nees, Georg. The Great Temptation, exhibition at ZKM, 2006. Accessed April 29, 2020. https://zkm.de/en/event/2006/08/georg-nees-the-great-temptation.

Nees, Georg. Generative Computergraphik. Munich: Barke Verlag, 1969.

Néret, Gilles. Malewitsch. Cologne: Taschen-Verlag, 2003.

Noë, Alva. Out of Our Heads. Why You Are Not Your Brain and Other Lessons From the Biology of Consciousness. New York: Hill & Wang, 2010.

Putnam, Hilary. Reason, Truth and History. Cambridge: Cambridge University Press, 1981.

Rilke, Rainer Maria. The Poetry of Rainer Maria Rilke. Translated by Anthony S. Kline. BOD: Poetry in Translation, 2001.

Schmidhuber, Jürgen. “A Formal Theory of Creativity to Model the Creation of Art.” In Computers and Creativity. Edited by Jon McCormack and Mark d’Inverno, 323-337. Berlin: Springer, 2012.

Turing, Alan M. “Computing Machinery and Intelligence.” Mind 59 (1950): 433-460.

Turing, Alan M. “Systems of Logic based on Ordinals.” Proceedings of the London Mathematical Society 2. vol. 45 (1939). Accessed April 12, 2020. https://www.dcc.fc.up.pt/~acm/turing-phd.pdf.

Von Neumann, John. The Computer and the Brain. New Haven: Yale University Press, 1958.

Von Neumann, John. Theory of Self-Reproducing Automata. Edited and completed by Arthur W. Burks. Champaign: University of Illinois Press, 1966.

Dieter Mersch

Dieter Mersch is Professor for Philosophical Aesthetics at the Zurich University of the Arts (ZHdK). He studied mathematics and philosophy at the Universities of Cologne and Bochum and received his PhD in philosophy at the Technical University Darmstadt. From 2000 to 2003 he was guest professor for Philosophy of Arts and Aesthetics at the School of Arts in Kiel, from 2004 to 2013 full professor for Media Theory and Media Studies at the University of Potsdam, in 2006 guest professor at the University of Chicago, in 2010 Fellow at the IKKM in Weimar, in 2012 Fellow at the ZHdK Zurich, Switzerland, and in 2013 guest professor at the State University São Paulo, Brazil. He has also been Chair of the DFG Research Training Centre «Visibility and Visualisation – Hybrid Forms of Pictorial Knowledge» since 2010 and is a member of the Editorial Board of the Annual Book for Media Philosophy (Jahrbuch für Medienphilosophie), the Publication Series Metabasis (Transcript, Bielefeld, Germany), and the DIGAREC Series, Potsdam University Press. His main areas of interest are philosophy of media, aesthetics and art theory, picture theory, semiotics, hermeneutics, poststructuralism, and theory of language and communication. He has published several books, such as Introduction to Umberto Eco (Hamburg 1993, in German: Einführung zu Umberto Eco); What shows itself: On Materiality, Presence, and Event (Munich 2002, in German: Was sich zeigt: Materialität, Präsenz, Ereignis); Event and Aura: Investigations on Aesthetics of Performativity (Frankfurt/M 2002, in German: Ereignis und Aura: Untersuchungen zu einer Ästhetik des Performativen); Introduction to Media Theory (Hamburg 2006, in German: Medientheorien zur Einführung); Post-Hermeneutics (Berlin 2010, in German: Posthermeneutik); and Ordo ab chao / Order from Noise (Berlin/Zurich 2013).
